DOLBY ATMOS - A BEGINNER'S GUIDE

Dolby Atmos appears to be the next "big thing" in audio reproduction. In this beginner's guide our technical manager, Tom Shorter, covers the basics and explains the pros & cons of this groundbreaking new audio format...

Dolby Atmos offers a completely immersive listening experience with sound moving around you in a 360 degree space – and if you’ve ever sat in an Atmos-equipped room it’s almost impossible not to be impressed by the results. However, the chequered history of attempts to introduce new audio standards is peppered with far more Betamax than VHS. Stereo sound became ubiquitous in the late 50s. Despite all the new audio formats introduced in the intervening 70 years, pretty much everything ever made is still listened to on a pair of headphones or two speakers.

It’s little surprise then that the market-hardened staff of KMR tend to be a little reserved when the latest in a long line of would-be visionaries introduces us to the most recent “revolutionary” format. It’s like 7.1 surround all over again. Which was like 5.1 all over again.

So why would Atmos be any different? Well, we’re not sure that it is just yet. But the advent of object-based mixing instead of channel-based is a fundamentally different approach with far-reaching ramifications. And with Apple seemingly throwing the considerable weight of their Apple Music streaming platform behind it, now seems the right time to see what all the noise is about.

What is "Object Based Mixing"?

With a conventional stereo bounce (Channel-Based Mixing or CBM), you take a number of mono and stereo tracks and sum them together to create a stereo mix file. Once this is done, that mix is locked in place and you're left with just the two channels of a single interleaved file forever.

With Object Based Mixing (OBM), the key difference is that your sources remain discrete and aren't merged into fixed output files. Instead, they are encoded as individual objects and assigned a position within a 3D space. Because this space is virtual and of a fixed shape, the position of that element can be applied as easily to a small home entertainment room as to a cinema, a stadium, or even just down to stereo playback.
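To make the idea concrete, here is a minimal sketch of an object carrying its own position, folded down to stereo at playback time. The `AudioObject` structure, the coordinate ranges and the constant-power pan law are all assumptions chosen for illustration; this is not how the Dolby renderer is implemented.

```python
import math
from dataclasses import dataclass


@dataclass
class AudioObject:
    """A mix element plus positional metadata (hypothetical structure)."""
    name: str
    x: float  # -1.0 = full left, +1.0 = full right
    y: float  # -1.0 = rear, +1.0 = front (ignored by the stereo fold-down)
    z: float  # 0.0 = ear level, 1.0 = ceiling (ignored by the stereo fold-down)


def render_to_stereo(obj: AudioObject) -> tuple[float, float]:
    """Return (left_gain, right_gain) using a constant-power pan law."""
    angle = (obj.x + 1.0) * math.pi / 4.0  # map x in [-1, 1] to [0, pi/2]
    return math.cos(angle), math.sin(angle)


vocal = AudioObject("vocal", x=0.0, y=1.0, z=0.0)
left, right = render_to_stereo(vocal)
print(f"{vocal.name}: L={left:.3f} R={right:.3f}")  # vocal: L=0.707 R=0.707
```

The point is that the position travels with the source, so a different playback system only needs a different render function, not a different mix.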

"Objects" and "Beds"

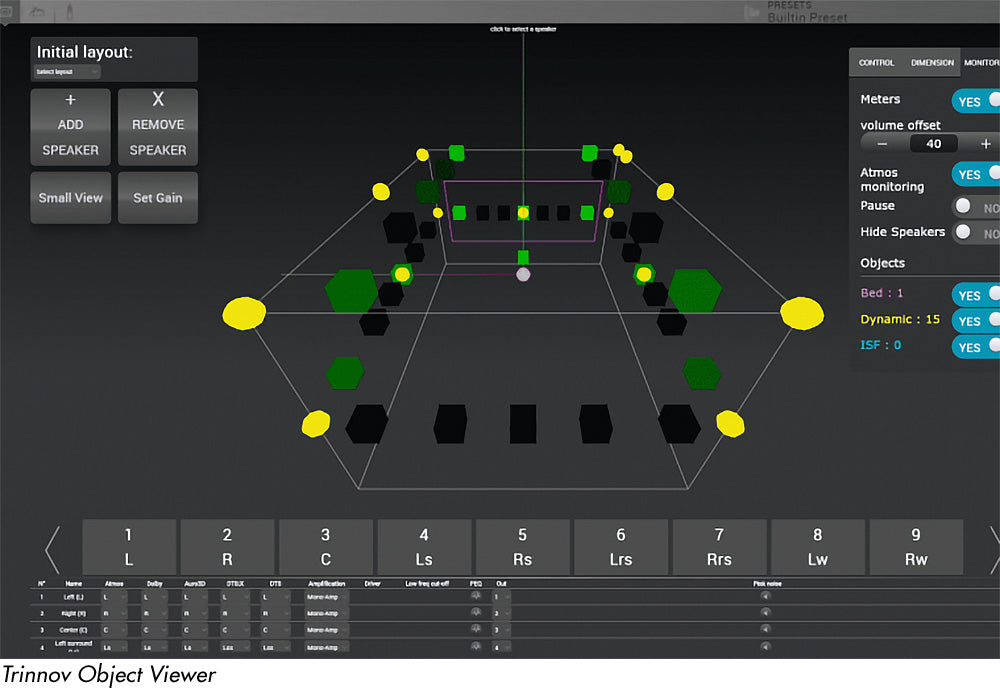

An object refers to any single discrete element in a mix, e.g. a kick drum, guitar, vocal etc. Think of it as Atmos' equivalent of a channel. An object can be moved around freely inside the 3D space, including automation of position, height and size.

A bed is used to send sources to specific channels within the array. For example, if you had a stereo recording of a piano, you might then decide to send those two sources to just the L and R channels. Within the mix, you can have beds that output in any number of configurations including standards like 5.1 and 7.1. It’s also useful from a processing perspective as you can treat the bed as a single part.
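As a rough illustration of the stereo piano example, a bed can be thought of as a set of sources pinned to named channels in a fixed layout. The `Bed` class, the channel labels and the source names below are hypothetical and are not taken from any Dolby or Avid API.

```python
from dataclasses import dataclass, field

# Channel labels for a 7.1 bed (assumed naming, for illustration only).
CHANNELS_7_1 = ("L", "R", "C", "LFE", "Ls", "Rs", "Lrs", "Rrs")


@dataclass
class Bed:
    """A group of sources pinned to fixed channels (hypothetical model)."""
    name: str
    channels: tuple[str, ...] = CHANNELS_7_1
    routing: dict[str, str] = field(default_factory=dict)

    def route(self, source: str, channel: str) -> None:
        """Send a source to one channel of the bed's layout."""
        if channel not in self.channels:
            raise ValueError(f"{channel!r} is not in this bed's layout")
        self.routing[source] = channel


piano = Bed("piano")
piano.route("piano_left_mic", "L")
piano.route("piano_right_mic", "R")
print(piano.routing)  # {'piano_left_mic': 'L', 'piano_right_mic': 'R'}
```

Unlike an object, nothing here has a position: the sources simply land on the channels they were sent to.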

What's the easiest way to get into Atmos?

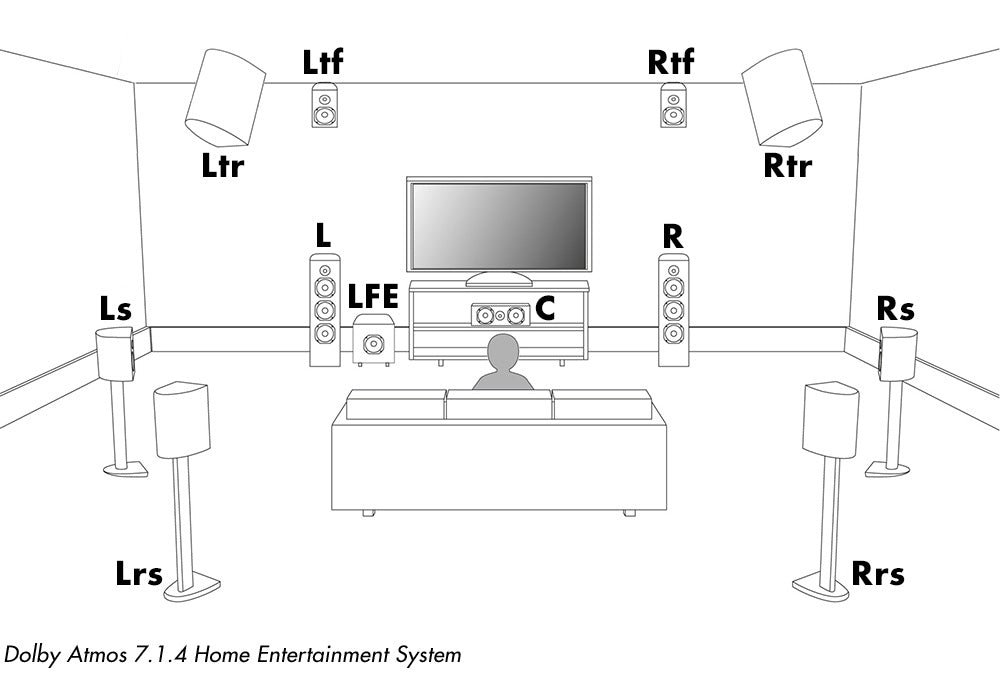

Home Entertainment is probably Dolby's largest target audience. For Atmos, the minimum number of physical output channels for monitoring is 12, making a 7.1.4 system. This meets the “Home Entertainment” requirement which means you can mix for Netflix and Amazon and general small-screen TV content. More notably, this is what we use for music.
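The "7.1.4" label reads as ear-level speakers, then LFE, then height speakers, and the three numbers add up to the channel count. A quick sketch of that arithmetic, where `channel_count` is a hypothetical helper name:

```python
def channel_count(layout: str) -> int:
    """Sum the dot-separated fields of a layout label such as '7.1.4'."""
    return sum(int(part) for part in layout.split("."))


for layout in ("5.1", "7.1", "7.1.4"):
    print(layout, "->", channel_count(layout), "channels")
# 5.1 -> 6 channels
# 7.1 -> 8 channels
# 7.1.4 -> 12 channels
```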

To offer a massive oversimplification, here are the minimum system requirements:

- An interface capable of outputting 12 channels with ganged level control across all channels

- 12 speakers: Left, Centre, Right, Left Surround, Right Surround, Left Rear Surround, Right Rear Surround, a subwoofer for the LFE channel, and 4 Height channels above the listener, arranged in a square with the mix position in the centre.

- A way to time align all of these speakers and, if possible, calibrate them for the best possible full range output.

- A filter matrix on the outputs for applying the Dolby Curve.
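On the time alignment point, the usual approach is to delay the nearer speakers so that everything arrives at the mix position together with the farthest one. The sketch below assumes a speed of sound of 343 m/s and uses made-up distances; in practice your monitor controller or calibration software would do this for you.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate value for air at around 20 °C


def alignment_delays_ms(distances_m: dict[str, float]) -> dict[str, float]:
    """Delay each speaker so its sound arrives with the farthest one."""
    farthest = max(distances_m.values())
    return {
        name: (farthest - distance) / SPEED_OF_SOUND_M_S * 1000.0
        for name, distance in distances_m.items()
    }


# Hypothetical measured distances from each speaker to the mix position.
distances = {"L": 2.0, "C": 1.9, "R": 2.0, "Ltf": 1.6}
for name, delay in alignment_delays_ms(distances).items():
    print(f"{name}: {delay:.2f} ms")
```

The farthest speakers get no delay and every other speaker is held back by the time sound takes to cover the difference in distance.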

By way of example, here's a possible configuration that would work in a small room...

- Avid HD Native Thunderbolt

- Avid MTRX Studio

- 3x Neumann KH310 (LCR)

- 4x Neumann KH120 (Ls, Rs, Lrs, Rrs)

- 4x Neumann KH80 (Ltf, Rtf, Ltr, Rtr)

- 2x Neumann KH750 (LFE, clustered)

Apply the Dolby Curve

After your speakers are bolted to the walls and ceiling and are time aligned and calibrated, you'll then need to apply Dolby's curve to the system. The intention of the curve is to provide a common reference point between all Atmos applications. Because the RT60 (the time it takes for sound in a room to decay by 60 dB once the source stops) of a small room would generally be much longer than that of a large treated theatre, the additional build up of bass skews the mix. The curve pulls out a carefully calculated amount of the low end in order to bring the perceived low end output of a small space in line with a full theatrical playback system.

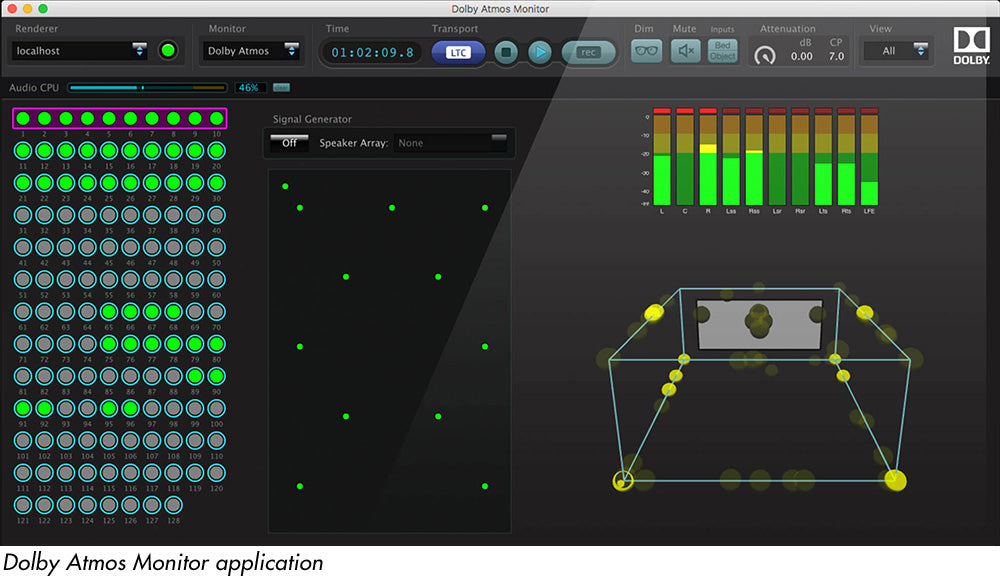

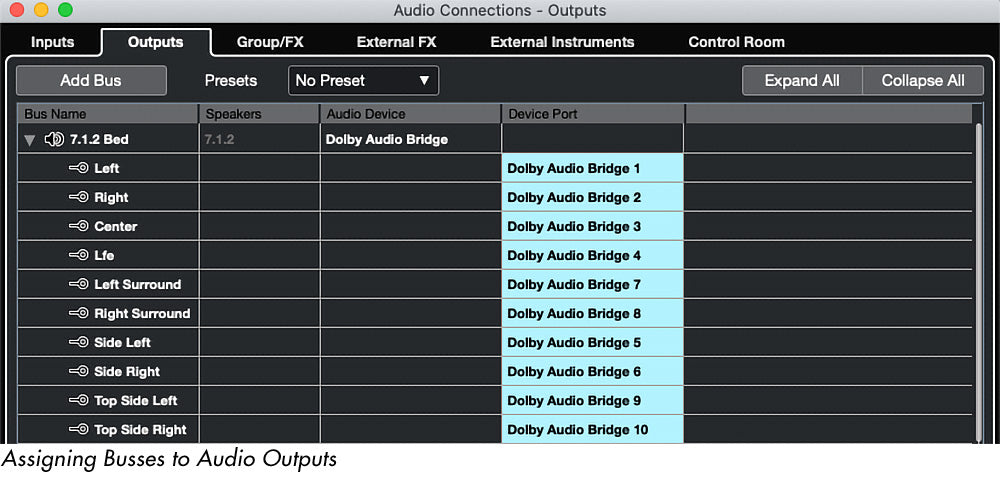

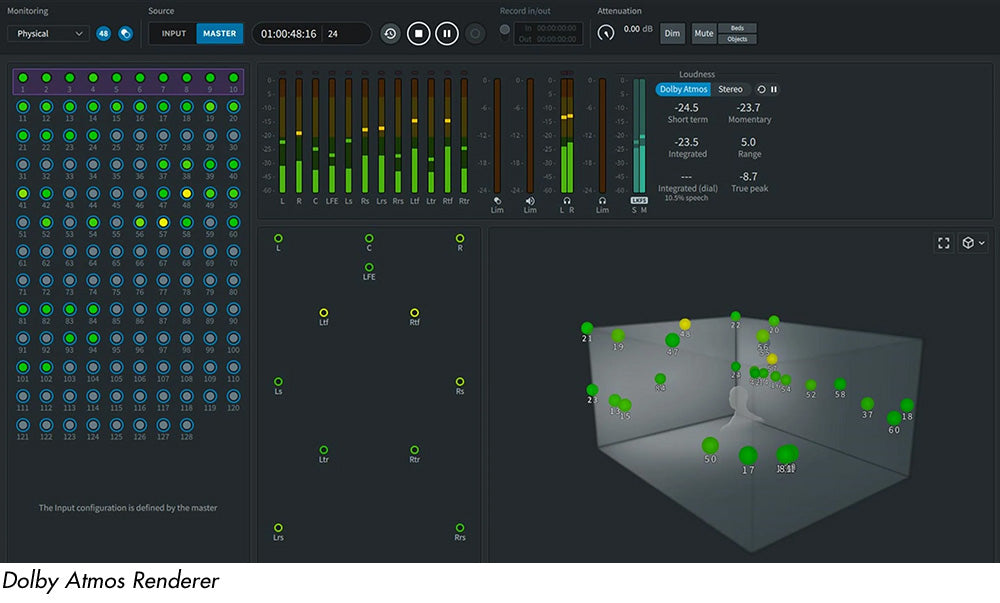

Renderer vs. RMU

To get the audio out of Pro Tools and into Atmos, there has to be an intermediary encoding process. This can take two forms: either you can use Dolby Production Suite, which uses its own Audio Bridge to replace the playback engine within Pro Tools before addressing your output device directly, or you can use an external Rendering and Mastering Unit (RMU). The external units are normally approved standalone computers, usually a Dell or Mac Mini, which run the software. We see RMUs come into play more with broadcast applications, but in music we're mostly seeing mixers using the production suite.

Setting a standard

From the above, and our general travels in Atmos-land, it appears that Dolby's goal was to apply a ubiquitous format that could work as well in a large theatre as it would on headphones. While I think the bones are in the right place, there are still some shortcomings in the standardisation that need clarification before the audio world can fully embrace it. The format is still heavily influenced by its theatrical background and much of the documentation refers to elements that have no relevance to music makers. That said, there does seem to be a rapidly emerging convention of best practice. Intrepid early adopters have already worked through the pitfalls of the non-specificity of the guidelines and there are now some generally accepted rules. What's more, the labels have pretty specific delivery requirements where they even prescribe certain object placements and the general ethos toward approaching OBM.

Things to watch out for...

1. Bass management needs improving

In the context of Dolby, LFE refers to Low Frequency Effects, not enhancement. This channel was designed solely for making the floor shake when helicopters explode and, so far, seems to have next to no application for music makers.

The Dolby specification dictates that the LFE can cross over at anything up to 250 Hz and has to have an additional 10 dB of in-band gain over the rest of the system. Bearing in mind the reference level for a home entertainment monitoring system has to hit 79 dBC, that's an extremely overspecified sub for a system of this size! The lack of specificity on the crossover point also means that your system might be crossing over at a sensible 80 Hz and the studio next door could be using that full, eye-watering 250 Hz. Needless to say, any bass-managed object would not translate between these two systems. It gets worse... with the higher crossover points especially, as you pan the object to the rear, the source of the LF information remains at the front, because that's where the sub is. So you'd end up with a single source split between front and rear, compromising both its coherency and directionality. However, all speakers in an Atmos system are supposed to be full range, so you should be able to install the sub to tick the box and then largely disregard it – the downside being that you are effectively left with a rather expensive coffee table!
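To put that extra 10 dB in perspective, the standard decibel conversions show how much more the sub has to deliver than a main channel in the same band. This is general dB arithmetic rather than anything Atmos-specific, and the function names are just illustrative.

```python
def db_to_amplitude_ratio(db: float) -> float:
    """Convert a level difference in dB to a sound pressure (amplitude) ratio."""
    return 10.0 ** (db / 20.0)


def db_to_power_ratio(db: float) -> float:
    """Convert a level difference in dB to a power ratio."""
    return 10.0 ** (db / 10.0)


LFE_IN_BAND_GAIN_DB = 10.0
print(f"amplitude x{db_to_amplitude_ratio(LFE_IN_BAND_GAIN_DB):.2f}")  # amplitude x3.16
print(f"power x{db_to_power_ratio(LFE_IN_BAND_GAIN_DB):.0f}")          # power x10
```

In other words, 10 dB of extra in-band gain means roughly three times the sound pressure and ten times the power.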

2. Dolby Digital Plus JOC vs. AC-4 IMS

Mix engineers have found this a key source of frustration during the format's early teething period. One of the biggest drivers for adopting the format was Apple's announcement regarding the revised delivery specification for their streaming platform. If you wanted to get on to Apple Music, you needed to deliver an Object Based Mix. Apple then quietly brought out an iPhone update, not only adding the Spatial Audio tickbox in settings but also making it enabled by default. This, weirdly, meant lots of consumers with AirPods were suddenly listening to Atmos without even knowing it. The coup instantly made iPhone users with AirPods by far the largest demographic for consuming 3D audio - and likely multiplied the number of people listening to Atmos by a factor of thousands!

However, this brought with it an issue: Apple used the older DD+ JOC format for their Spatial Audio and fold it to binaural with their own renderer, whereas the binaural output of Dolby's Renderers and RMUs corresponds to AC-4 IMS. This is of note because the two don't fold to binaural in the same way. So, if you're mixing a track through your Dolby monitoring system and have it folded down to Binaural for a headphone mix check, it won't sound the same as it does on iPhone. The only way to get an idea of how your mix sounds on AirPods with an iPhone (again, by far the largest potential demographic), is to bounce the file as an MP4 and then play it back on an iPhone - not exactly slick. At time of publication, there is no way to monitor your mix as it would sound on iPhone in real time.

What's next for Atmos?

As suppliers of studio monitors - and much as we’d like to believe otherwise - we think it’s highly unlikely that consumers are going to install 12 speakers in their living rooms to watch YouTube videos immersively. Simply speaking, the significant hardware investment means Atmos is unlikely to become a ubiquitous consumer format. However, the application benefits of being object-based are significant. Being able to apply any production automatically to any size of space or playback system is nothing short of unprecedented. Its inherent compatibility with existing stereo and even mono playback also sidesteps the need for multiple delivery codecs for different systems.

Dolby Atmos may well be a game-changer for the film and post-production industries, but for music reproduction the future is perhaps not quite so clear. It's worth bearing in mind that with 60,000 tracks being added to Spotify every day, it simply isn’t possible to replace the huge army of music creators out there with Atmos mixers overnight. So, while there is seemingly a fierce appetite for mixers and masterers that can deliver Dolby, I suspect the audio industry will end up in a place where the majority of content continues to be made in stereo and is then upmixed by a smaller community of Atmos engineers. Possibly even at the mastering stage.

Dolby Atmos is an exciting development and the end-user experience is unquestionably impressive. However, for the music production industry its success or failure will depend entirely on consumer demand. Let’s see where we are in 12 months' time…

Useful links

DOLBY ATMOS MUSIC & POST KNOWLEDGE BASE: https://professionalsupport.dolby.com/s/dolby-atmos-music-and-post?language=en_US

AVID ATMOS DELIVERY GUIDE: https://www.avid.com/resource-center/encoding-and-delivering-dolby-atmos-music

DOLBY ATMOS STUDIO CONFIGURATION: https://developer.dolby.com/tools-media/studio-resources/Atmos-in-studio/

DOLBY ATMOS TECHNICAL GUIDE: https://dolby.my.salesforce.com/sfc/p/#700000009YuG/a/4u000000lFHc/UYA0IZeD632SUXVmEPmUcr.wIuhpHp6Q7bVSl4LrbUQ

Download a free trial copy of Dolby Production Suite here:

https://developer.dolby.com/forms/dolby-atmos-production-suite-trial/